Tools: Usertesting.com, Figma, Google Slides

My Role: Design testing script, conduct usability testing, analyze and present findings with recommendations

Team: Senior Director of User Experience, User Research Intern [me]

Summary:

In January 2019, I interned with Brightcove’s UX team on a three-week project. The goal was to better understand how potential Brightcove customers navigate the registration process for free trials of the company's video platform products. The more users complete free-trial registration, the more opportunities there are to convert them into paying customers. During my time at Brightcove, I wrote a testing script, conducted remote, unmoderated usability tests, analyzed my findings, and presented my work to colleagues across the company.

Study Design:

In this study, I tested the trial registration process for Brightcove’s three video platform products (Video Cloud, Video Marketing Suite, and Enterprise Video Suite). The study consisted of 10 participants: 7 on desktop and 3 on mobile, a split chosen to reflect site traffic according to analytics. Two additional mobile participants were omitted from all numerical data because they did not complete the registration; however, their comments and suggestions were included. I made this decision because their behavior was still valuable as qualitative data, while including their numbers would have skewed the quantitative results.

I recruited participants, administered tasks and questions, recorded testing sessions, and captured data through Usertesting.com, the platform used by Brightcove's UX team. Because of the project's short timeline, my supervisor encouraged me to conduct remote, unmoderated testing. A successful registration process must allow users to submit their information and continue on to explore the product trial.

The biggest limitation of this study was that I was not able to take part in the work that followed the presentation of my research: the day I presented my findings and recommendations was also the last day of my internship.

Project Goals and Questions:

‣ Evaluate how effectively users are navigating the site, especially when looking for Video Cloud, VMS, and EVS info.

Navigating a new site leaves a lasting impression. If it is frustrating for users to find what they need on your site, why wouldn't they expect the product to be just as frustrating? Positive experiences lead users to associate the brand with ease of use. This is why it is essential to make sure that new users can easily find information about the video platforms.

‣ Evaluate the clarity of the content on the Video Cloud, VMS, and EVS pages.

(Is it clear enough that users can decide which version best fits their needs?)

Once users find this information, it must be clear enough that they can confidently decide which version they need. Clearly articulating the differences between products and helping customers make their decision builds trust and gives users confidence in the company early on.

‣ Are users truly choosing the version of Studio that best fits their needs?

As with the previous goal, if users are clearly not choosing the appropriate version for their needs, there is still work to be done on how the three products are presented.

‣ Measure the usability of the registration form. [This is the final step in the registration process. Severe usability issues at this stage might result in abandoned forms.]

This was the clearest goal of the study. While most participants will continue for the sake of completing the study, real users facing usability issues may abandon the form, and the company loses potential customers who never get to experience the product. The design of the form must take human error into account and should not be overly rigid.

Challenges:

‣ Prioritizing which user needs would be most relevant to current and future Brightcove customers, since the participants in this study were not actual prospective Brightcove customers.

‣ Two participants were omitted from the study because they did not complete the registration task.

Findings:

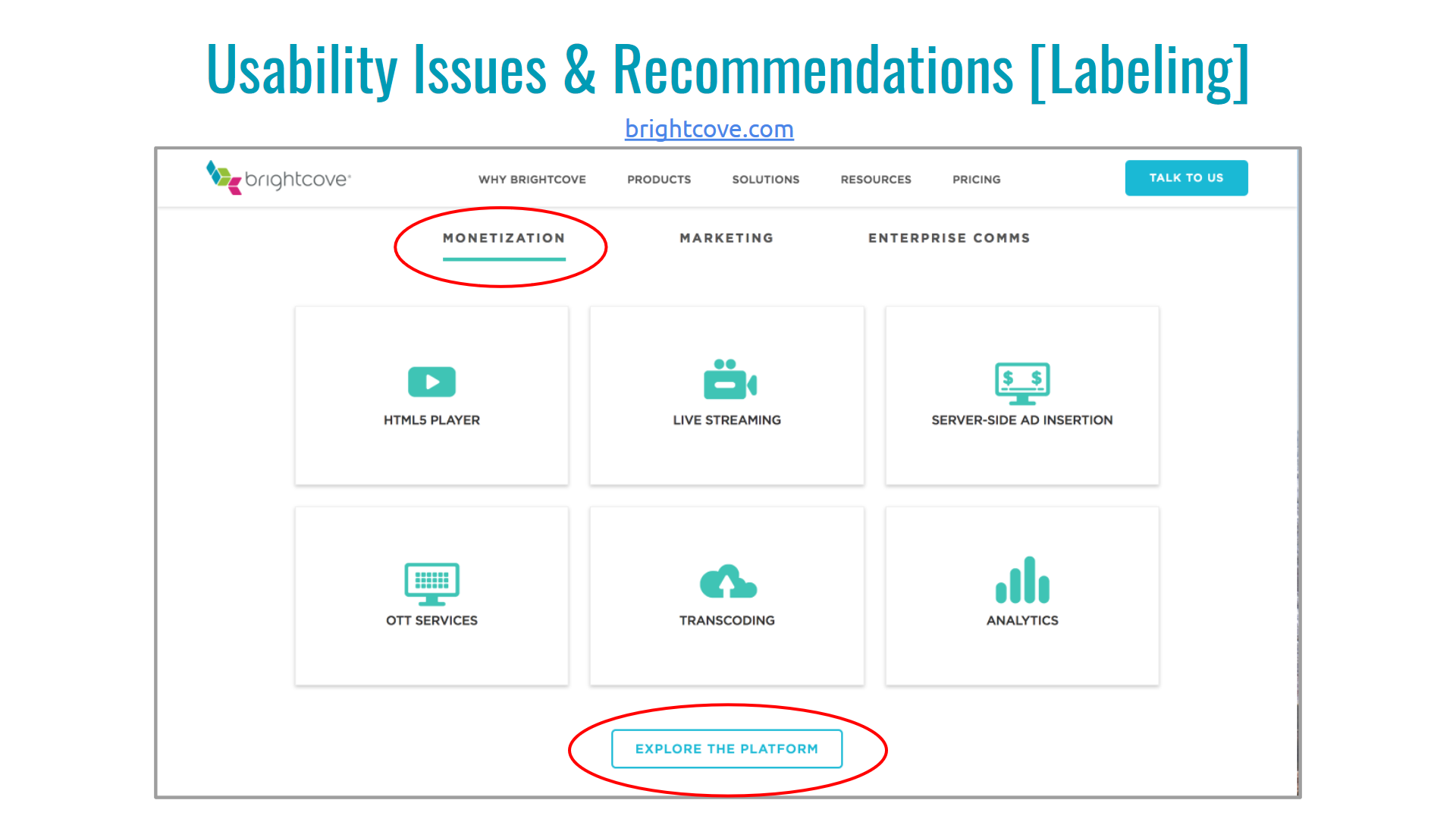

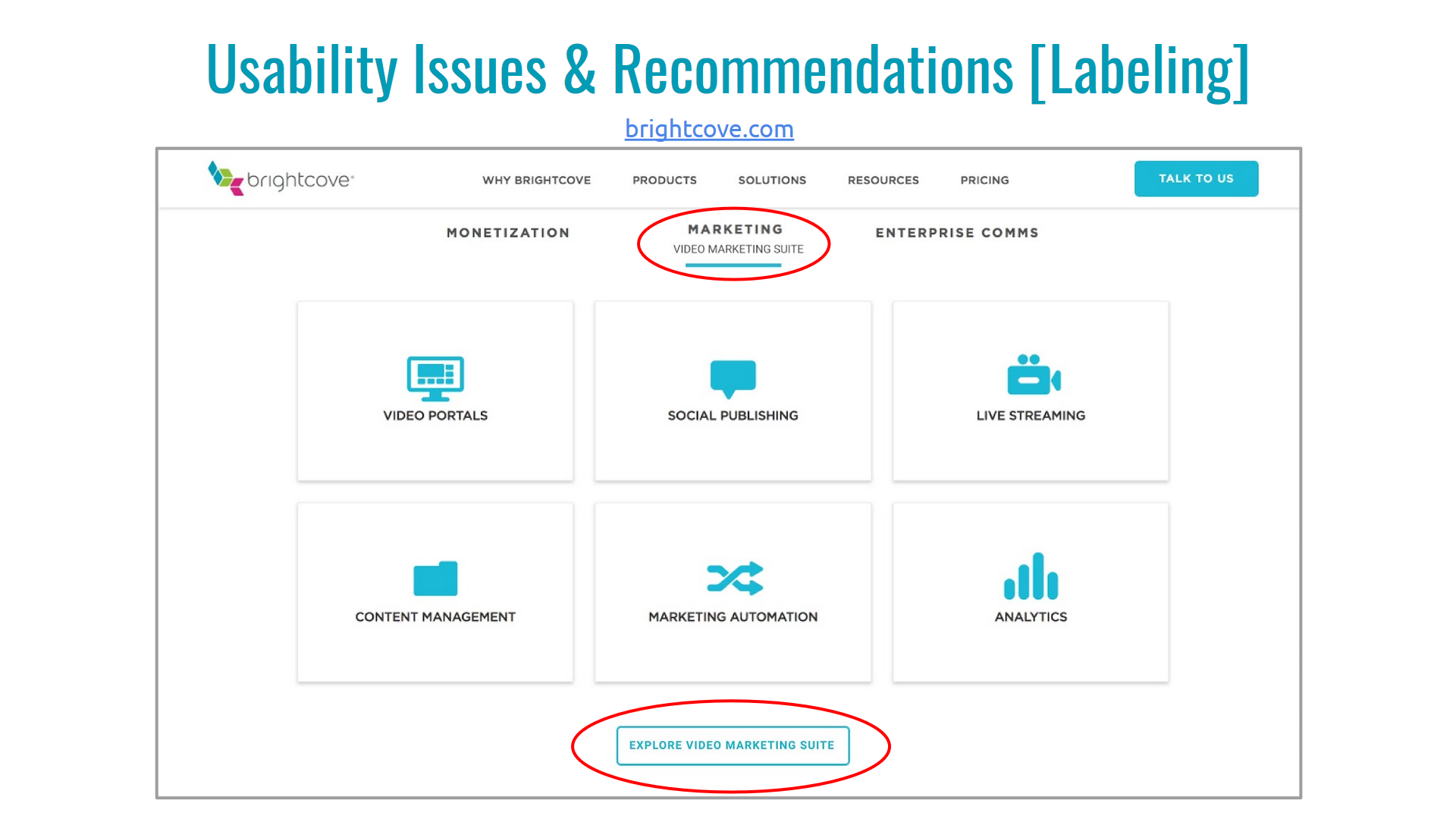

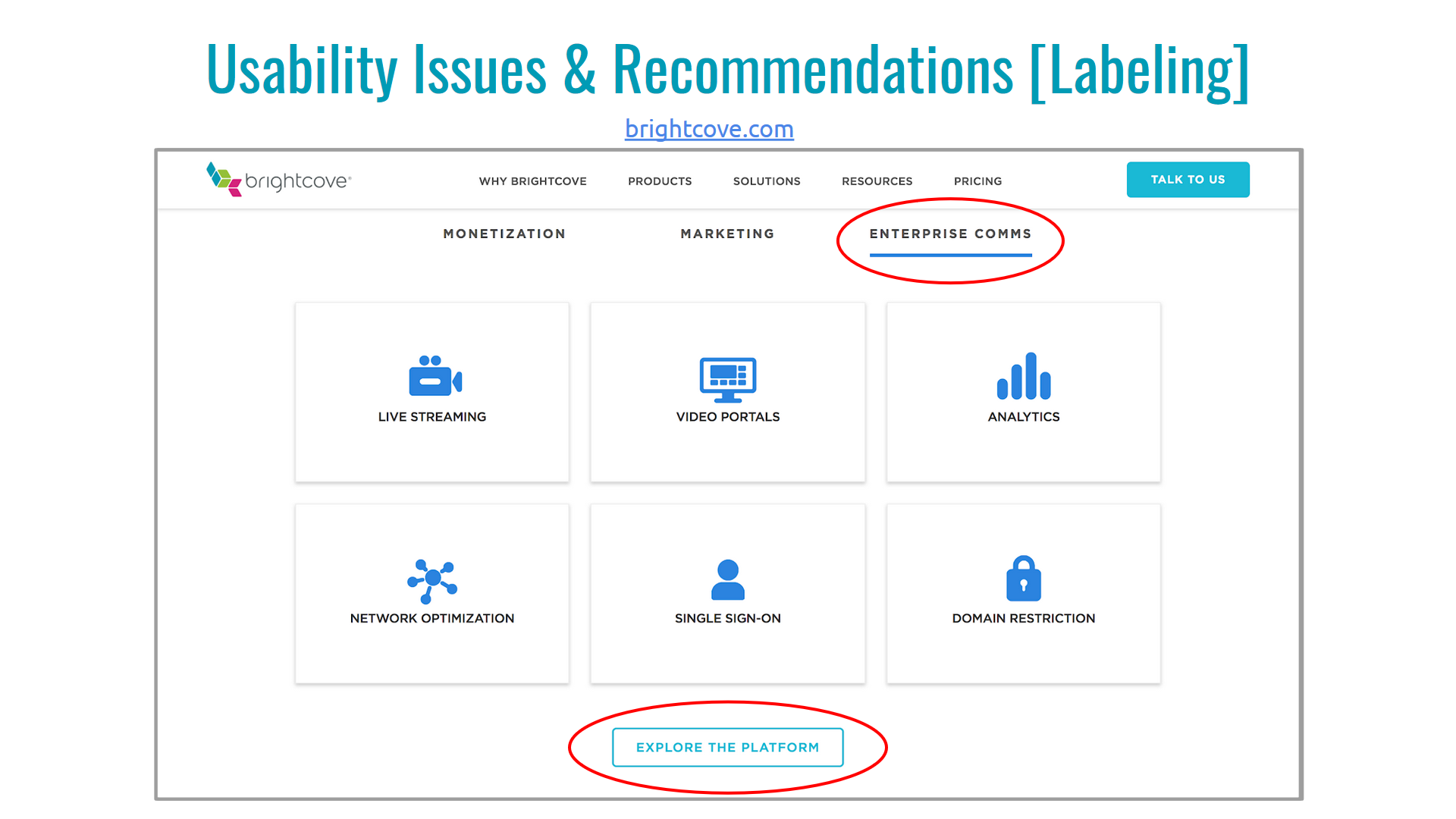

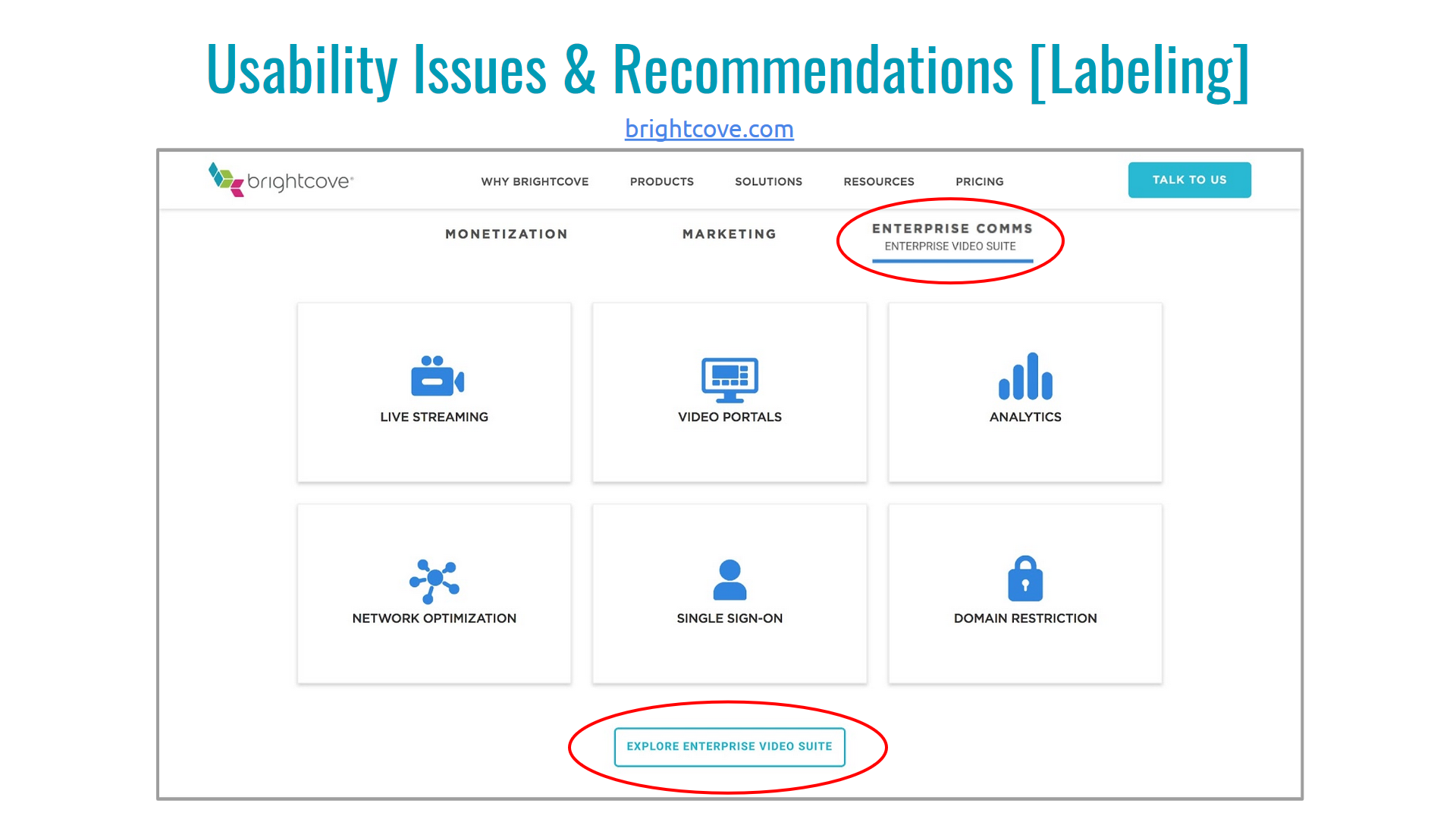

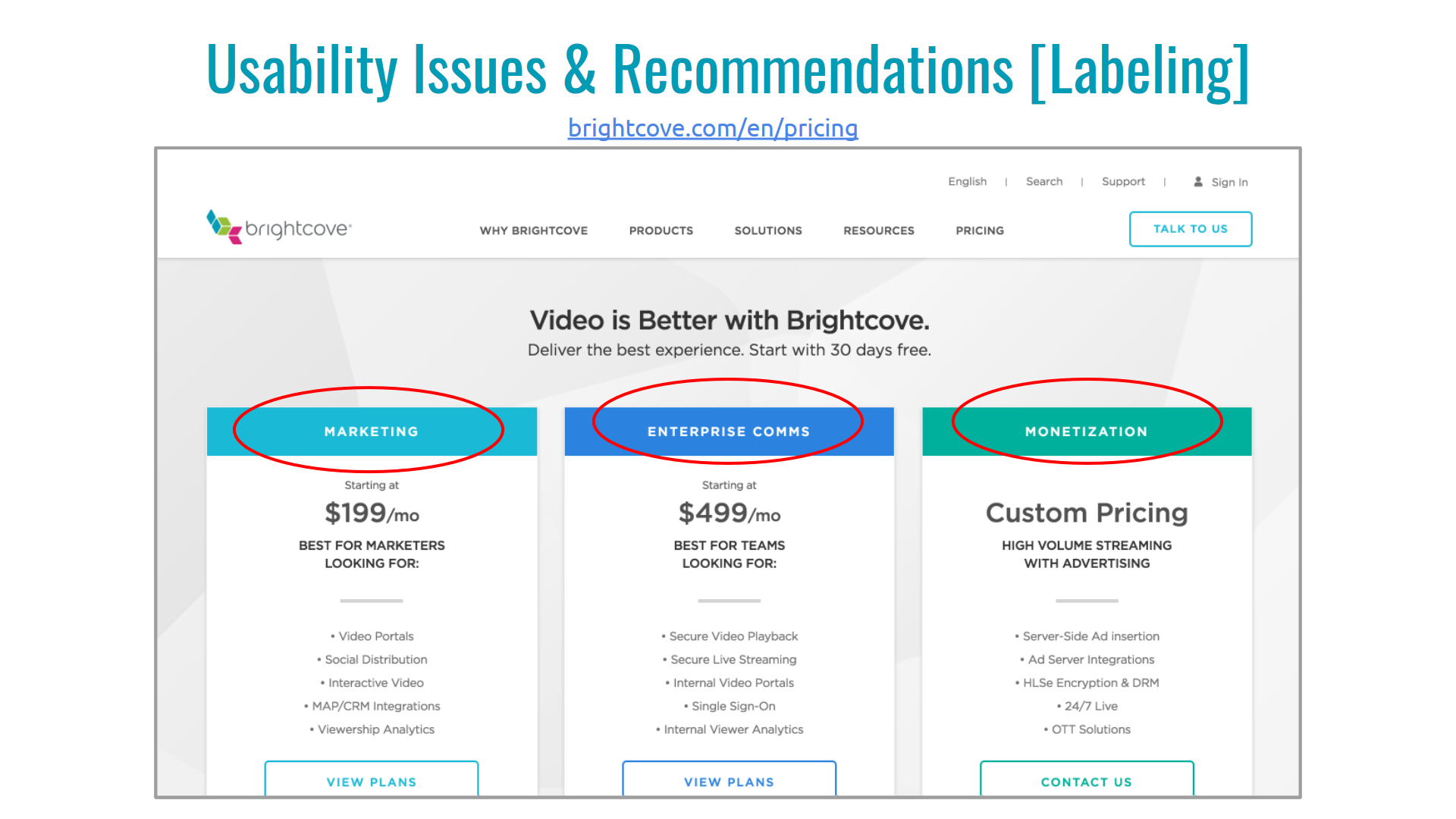

‣ Many participants were not able to tell which platform they were registering for because of inconsistent labeling throughout the site. The descriptors 'Marketing', 'Monetization', and 'Enterprise Communications' would be much more accessible to users if paired with the product names Video Marketing Suite, Video Cloud, and Enterprise Video Suite.

‣ The way pricing information was displayed was confusing to users.

‣ Users need to be directed to the plan that they want.

Supervisor Recommendation:

Malick Niane recently completed a user experience (UX) internship at Brightcove, completing 80 hours of work while conducting a usability study on our trial registration experience. I would like to submit the following recommendation based on my experience working with him as his direct supervisor. I first met Malick when he was still in high school. He came to Brightcove for a technology careers event and I had him participate in a usability study to gain exposure to user experience research. A few years later, he contacted me to say that the experience had changed his life, and he inquired about a UX internship. How could I say no? He had never conducted a usability study before, so I gave him Steve Krug's Don't Make Me Think and another book on remote testing, and crossed my fingers. Within three weeks, he had planned a study, set it up on our remote, un-moderated testing platform (usertesting.com), reviewed recordings from twelve participants, and analyzed and presented his findings and recommendations to a rapt audience of marketing and web professionals. He even mocked up designs for some of his recommendations. Malick was a pleasure to work with throughout the internship. He's smart, articulate, and has a great attitude. He strikes an appropriate balance between asking questions and figuring things out for himself. I would highly recommend him for an internship or entry-level job in user experience research.

– Kirsten Robinson, Sr. Director of User Experience at Brightcove

Select slides from findings report:

All slides are screenshots from the findings report. Video clips are not playable.

Screenshot from the Brightcove homepage. 'Monetization' represents the Video Cloud platform.

Mock up created in Figma for findings report. The homepage now specifies which platform is being selected and still uses 'Monetization' to describe the platform to potential customers.

Screenshot from the Brightcove homepage.

Mock-up created in Figma for the findings report. The text in the call-to-action button has been edited to reflect the selected platform.

Screenshot from the Brightcove homepage.

Mock up created in Figma for findings report.

Screenshot from the Brightcove pricing page.

Mock-up created in Figma for the findings report. The pricing page now shows the name of each video platform and its descriptor.

Screenshot from the Brightcove pricing page.

Mock up created in Figma for findings report. The pricing page now uses the name of the platform.

Screenshot from the findings report. Understanding the pricing for the video platforms was another pain point for participants in this study.